Advances in the overlapping spheres of engineering and computing have been increasingly driven by developments in highly specialised fields such as computer vision (where computers replace the human eye), visualization (computer graphics, animation, and virtual reality), machine learning (where systems improve automatically), and the software and systems strategies associated with them. Practical applications have multiple input streams containing data that must be analysed, combined, and interpreted. A key challenge is keeping the data processing burden low enough that the system can still be used in real time. Research at the CVIC within the University of Huddersfield (UoH) developed techniques that sped up data processing and were applied in practice in the field of visual and immersive computing.

The work focused on three interlinked strands of expertise: new algorithms and techniques for real-time CCTV analysis; smart factory automation based on machine vision; and improvements in data handling techniques in Augmented Reality (AR) product design used in health products for the visually impaired.

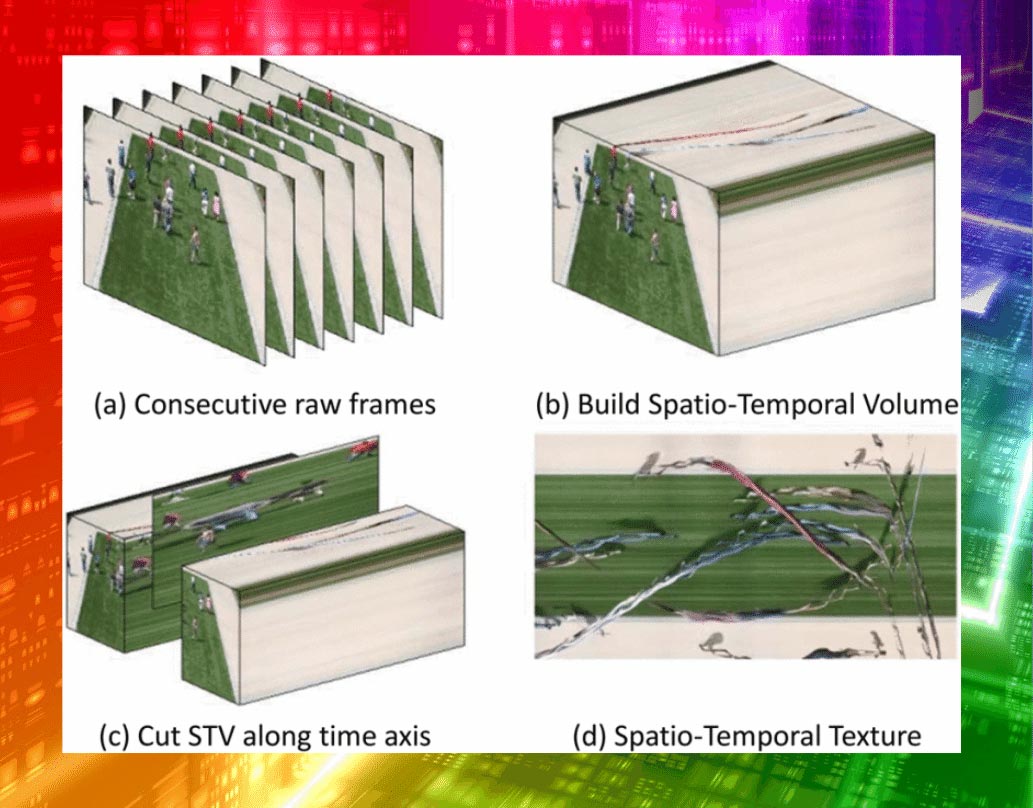

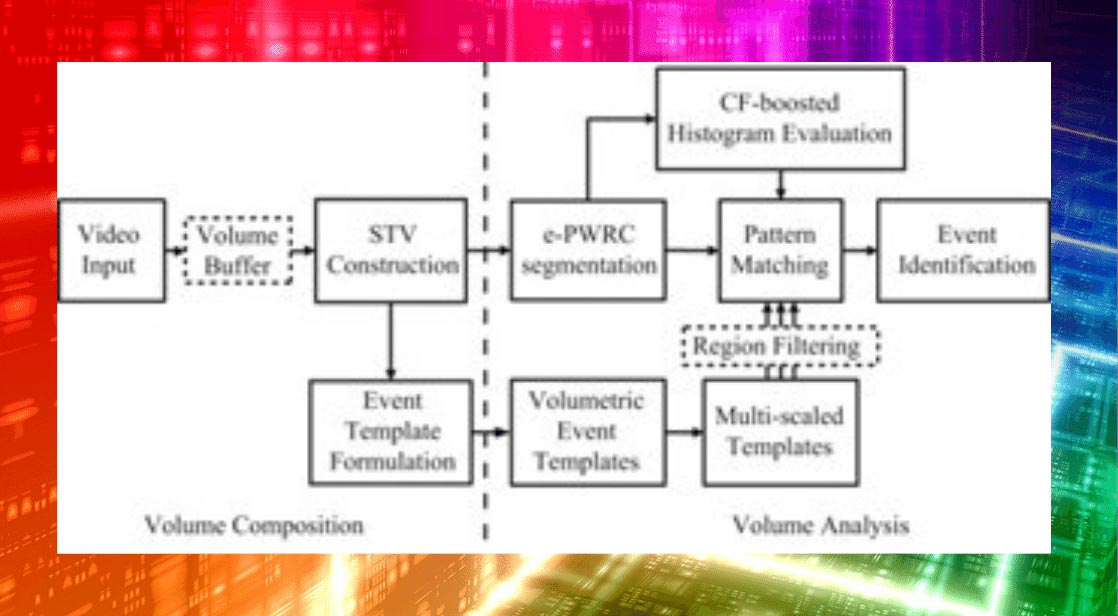

Devising new algorithms and techniques for real-time CCTV analysis

The research produced a model that, when implemented, automatically updated and recognized changes in a subject’s behavior, motion, and context (environment) by applying a feature map fast vector coding scheme, which allows features in images to be identified rapidly. The model combined advances in Artificial Neural Networks (created via so-called deep learning) with UoH-developed heterogeneous scalable neural networks, called the Treble Stream Semantic Network and likewise designed to accelerate data analysis. Together, these were used to resolve the long-standing challenge of accurately selecting delicate (subtle) features (individual items of interest). The model was used to process and classify complex crowd scenarios, and it enabled the recognition of changes in the behavior of an individual relative to their surroundings.

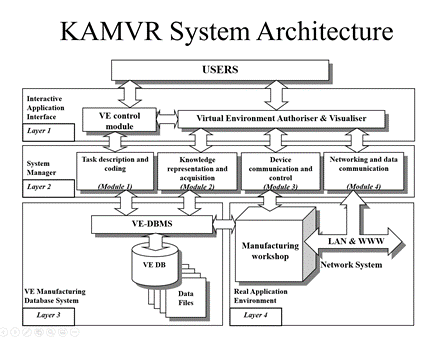

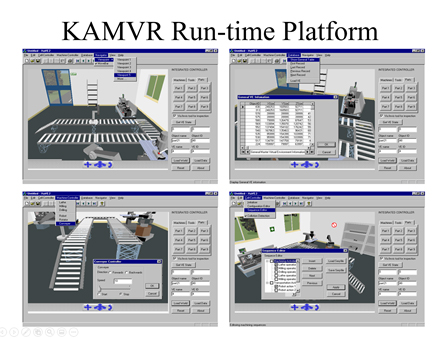

Developing smart factory automation based on information visualization and machine vision

The research explored the whole manufacturing process, from design to implementation on the shop floor. It used visualization techniques such as single-view and stereo vision to improve the performance of robotics equipment, including visual alignment for robot guidance (which improved accuracy in part placement), 3D detection (which enabled a robot arm to pick up a part), and parts measurement (used for quality control). Parallel computing hardware acceleration strategies, based on adapting commercially available ‘gaming’ graphics cards, were also devised. These transferred algorithm-acceleration approaches, originally developed for the software arena, to the computer hardware, so the algorithms ran faster. The faster online processing made it possible to incorporate deep learning capability (i.e., a trained artificial neural network model that runs in real time).
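To illustrate how stereo vision can supply the 3D position a robot arm needs to pick up a part, the sketch below applies the textbook pinhole relation for a rectified stereo pair, depth = focal length × baseline / disparity. It is a generic illustration and not the research team's algorithm; the function names and all camera parameters (focal length, baseline, principal point) are hypothetical.

```python
def stereo_depth(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Depth in metres of a point seen in a rectified stereo pair.

    focal_px     -- focal length in pixels (assumed equal for both cameras)
    baseline_m   -- distance between the two camera centres in metres
    disparity_px -- horizontal shift of the point between left and right images
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a point in front of the cameras")
    return focal_px * baseline_m / disparity_px


def triangulate(u: float, v: float, disparity_px: float,
                focal_px: float, baseline_m: float,
                cx: float, cy: float) -> tuple[float, float, float]:
    """Back-project pixel (u, v) of the left image to camera coordinates (x, y, z).

    (cx, cy) is the principal point of the left camera in pixels.
    """
    z = stereo_depth(focal_px, baseline_m, disparity_px)
    x = (u - cx) * z / focal_px
    y = (v - cy) * z / focal_px
    return (x, y, z)


if __name__ == "__main__":
    # Hypothetical camera: 800 px focal length, 12.5 cm baseline, 640x480 image.
    point = triangulate(u=420, v=240, disparity_px=50,
                        focal_px=800, baseline_m=0.125, cx=320, cy=240)
    print(point)
```

Because depth is inversely proportional to disparity, small disparity errors on distant parts produce large depth errors, which is one reason accurate feature matching matters for part placement.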

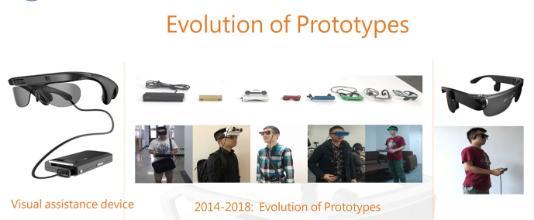

Deploying Intellectual Property in Augmented Reality (AR) product design for the global visually impaired population

The research team developed algorithms described in 8 international patents. These improved i. the integrity of data supplied from multiple sensors (e.g. IR, UV, ultrasound), providing better signal quality for signal fusion (2016), and ii. an open-source scene recognition programming API, which was made available for programmers to exploit on the iOS and Android platforms. The work has transformed practice in the challenging domain of real-time scene recognition by synthesizing data from multiple input sources in real time.
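As a rough illustration of why signal quality matters when fusing data from several sensors, the sketch below uses inverse-variance weighting, a standard textbook fusion rule in which noisier readings contribute less to the combined estimate. The patented algorithms are not described in this text, so this is a stand-in technique and not the team's method; the function name and the sample readings are hypothetical.

```python
def fuse_readings(readings: list[tuple[float, float]]) -> tuple[float, float]:
    """Combine independent sensor readings of the same quantity.

    readings -- list of (value, variance) pairs, one per sensor;
                a smaller variance means a more trustworthy sensor.
    Returns (fused_value, fused_variance).
    """
    if not readings:
        raise ValueError("at least one reading is required")
    if any(var <= 0 for _, var in readings):
        raise ValueError("variances must be positive")

    # Each sensor is weighted by the inverse of its noise variance.
    weights = [1.0 / var for _, var in readings]
    total_weight = sum(weights)
    fused_value = sum(w * value for w, (value, _) in zip(weights, readings)) / total_weight
    # The fused variance is never larger than the best single sensor's variance.
    fused_variance = 1.0 / total_weight
    return fused_value, fused_variance


if __name__ == "__main__":
    # Hypothetical distance estimates (metres) from an IR and an ultrasound sensor.
    ir = (10.0, 1.0)          # lower variance: more trusted
    ultrasound = (14.0, 4.0)  # higher variance: less trusted
    print(fuse_readings([ir, ultrasound]))
```

Under this rule, improving the integrity of any single sensor's data (lowering its variance) directly tightens the fused estimate, which is the general motivation for cleaning signals before fusion.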